Would an 850W power supply be sufficient to run a PC with a 12900K CPU, a 4090 GPU and almost no peripherals?

I'm planning to do that (with more peripherals), but I'm also planning to cap the 4090 at ~70% TDP.
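A rough way to sanity-check the 850W question, as a sketch only. It assumes the 12900K's 241 W Maximum Turbo Power, the 4090's 450 W rated total graphics power, and a hypothetical 75 W allowance for everything else. It estimates sustained draw only and ignores the brief transient spikes that are the usual reason for leaving PSU headroom.

```python
# Rough steady-state PSU budget sketch. All figures are nominal ratings or
# assumptions, not measurements; millisecond transient spikes are NOT modelled.
CPU_MAX_TURBO_W = 241    # Intel's Maximum Turbo Power (PL2) for the i9-12900K
GPU_TGP_W = 450          # Nvidia's rated total graphics power for the RTX 4090
REST_OF_SYSTEM_W = 75    # hypothetical allowance: board, RAM, SSDs, fans, few peripherals


def system_draw(gpu_power_fraction: float) -> float:
    """Estimated worst-case sustained draw with the GPU capped at a fraction of its TGP."""
    return CPU_MAX_TURBO_W + GPU_TGP_W * gpu_power_fraction + REST_OF_SYSTEM_W


def load_on_psu(psu_watts: float, draw_watts: float) -> float:
    """Fraction of the PSU's rated output used by the estimated draw."""
    return draw_watts / psu_watts


for fraction in (1.0, 0.7):
    draw = system_draw(fraction)
    print(f"GPU at {fraction:.0%}: ~{draw:.0f} W, {load_on_psu(850, draw):.0%} of 850 W")
```

With those assumptions, full power lands around 766 W (about 90% of 850 W) and a 70% cap around 631 W (about 74%).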

|OT| The PC Hardware Thread -- Buy/Upgrade/Ask/Answer

- Thread starter Durante



didamangi

Sometimes maybe good, sometimes maybe shit.

According to the "notify me" email Nvidia sent today at 03:00, the 4090 FE will be available to purchase today at 15:00 CEST.

Yeah, I also saw that. I'm curious what the supply situation will be like.

I'm not in a huge hurry.

Only links to third-party models for me, no FE sold through nvidia.com.

As far as I know, for Germany (and Austria) the FE should be available at notebooksbilliger.de, but for now I only see third-party models there.

Those have remained in stock for a while now though, so I very much doubt we'll see a repeat of the 30-series situation stock-wise.

Do you know if Nvidia stopped selling the FE through nvidia.com? Hmmm, I'm not sure if I should monitor nvidia.com or one of the retailers selling third-party models.

I got my 3090 from nvidia.com, as far as I remember. It was impossible to get one at launch though; I had to refresh nvidia.com daily for a couple of weeks to get one.

They certainly did. Unless they restarted it since the 30 series.

I got my 3090 FE from NBB in 2020.

How would you do that, exactly?

Yeah the 4080 and the can't-believe-it's-not-4070 are very much reduced compared to the 4090. Or alternatively the 4090 is uncommonly far ahead. A bit of both really.

Alt-Z to open the GeForce Experience overlay -> Performance -> Power slider

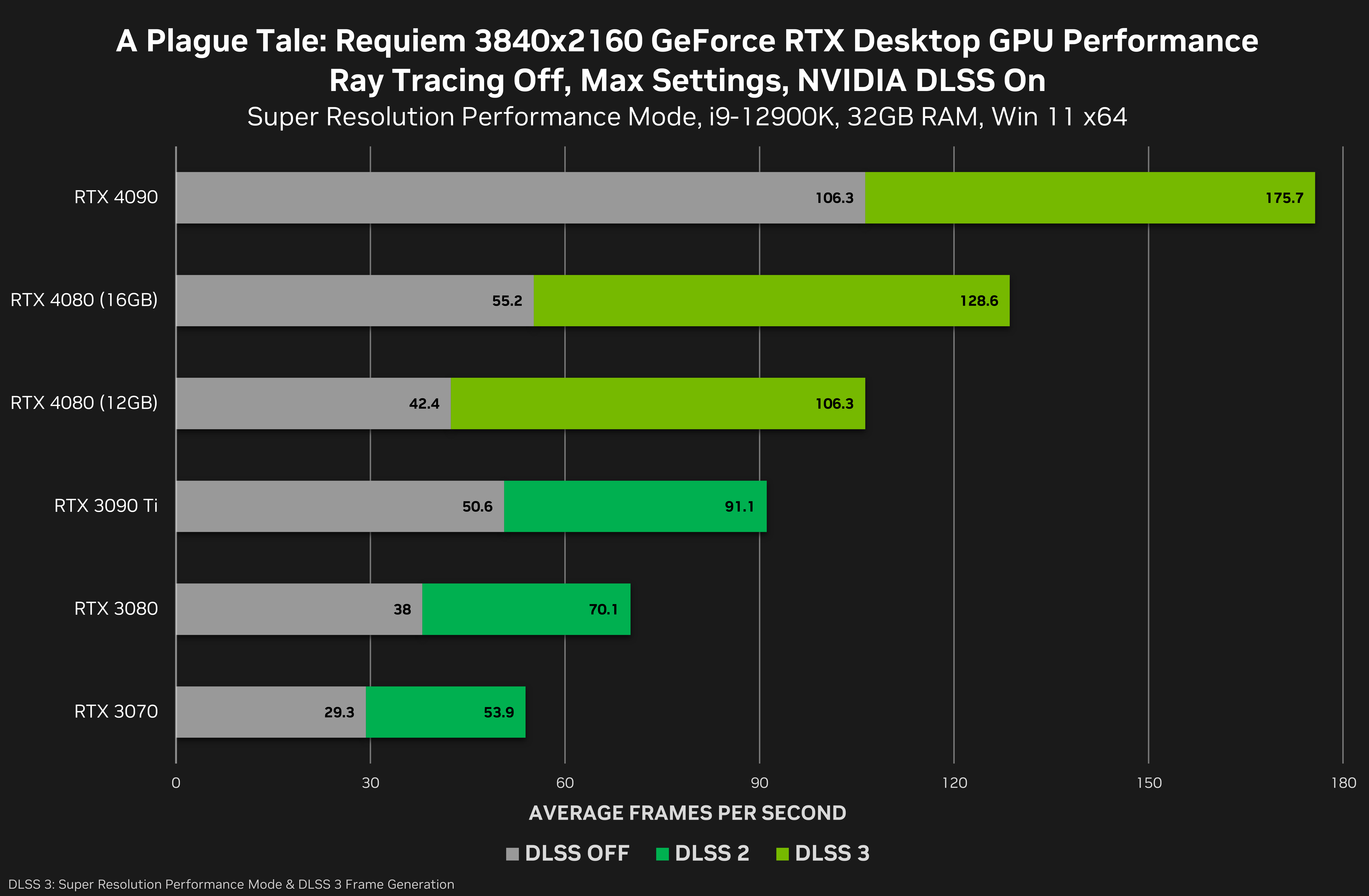

Nvidia's dropping some 4080 16GB & 12GB benchmarks in their blog; performance is way lower than a 4090 in A Plague Tale: Requiem.

So the 4080 and 4080 12 GB are slower than the 3080?

Most likely via MSI Afterburner; there's a power limit slider.

I mean, they compare DLSS3 to DLSS2, so each DLSS3 result should be cut in half, right?

Why not check the DLSS OFF part of the graph? Looks like the 12GB performs quite similarly in that game, marginally better than the 3080.

How do the additional 4GB of VRAM give that version of the 4080 an extra 10 fps, though? Does the game constantly choke on streaming textures or what?

Artifacts aside, has anyone tested going from 30 fps to DLSS3 to see how it feels to play? I imagine it's still crappy for racing games and shooters? Or did everyone only test 60 to 120 etc.?


didamangi


The difference between the 16GB and 12GB is more than just VRAM. The 12GB has fewer CUDA, Tensor and RT cores and less memory bandwidth. Basically, what many people are saying is that the 4080 12GB should be a 4070 or even a 4060 Ti based on its specs.

I think the DLSS off results allow for a good comparison of rasterised performance.

So 4080 16GB > 3090 Ti and "(I can't believe it's not) 4070" > 3080

According to a writer for one of the big tech/gaming sites in Sweden (sweclockers.com), via private message on the SweClockers forum, the 4090 Founders Edition will not be sold in Sweden ...

This may be of interest for some of you (not available in Sweden though):

4090 available through verified priority access, for users who can prove through GeForce Experience that they own some previous GeForce models.

Will apparently be available in the US, UK, Germany, Netherlands, France, Italy and Spain.


Nvidia will kill the third-party card market and then not release their FE cards outside of their usual markets.

Which sounds par for the course for Nvidia.


Actually, the whole idea that Nvidia is out to kill AiB partners is silly. Whatever you think of Nvidia, they aren't in the business of killing their own revenue streams, which is what that would do.

I think in a competitive market AIB partners are very useful and Nvidia would be stupid to ditch them. If they ever get into a position like Apple's, though, where Nvidia graphics cards are their own thing and hard to compare to AMD/Intel... they'd then be in a position to just do so if the numbers say it's the right move.

So they decided to unlaunch the 12GB "4080".

So, any bets what they'll rename it to? 4070? 4070Ti? 4080/2 Days? Something else entirely?


didamangi


I think 8K gaming is percent of a percent luxury. Even most 4090 owners won’t be playing games at that resolution.

I mean, let’s be honest, who has space for a TV big enough to ever reap the benefits of 8K? And is there even a point in 8K monitors?

Someone correct me if I’m wrong but surely you won’t even be able to tell the difference between 8K and 4K on most home-sized TVs. We’re talking cinema-size screens before 8K and 4K can be differentiated.

I guess if you want to downsample 8K to 4K you might want to render at that resolution but again, I think I’d rather take the performance than have a microscopic visual enhancement.


If you care about crazy-high PPI on your monitors, 8K might be interesting at anything above 27 inches, to get near 300 PPI. Of course, such a high pixel density is pretty pointless unless your face is literally glued to your screen when playing games.
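For reference, pixel density is just the diagonal resolution in pixels divided by the diagonal size in inches. A small sketch, assuming 16:9 panels with square pixels (3840x2160 for 4K, 7680x4320 for 8K); the two screen sizes are just example values:

```python
import math


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch: diagonal resolution in pixels divided by diagonal size in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


# Example sizes only; swap in whatever monitor you're considering.
for name, (w, h) in {"4K": (3840, 2160), "8K": (7680, 4320)}.items():
    for size in (27, 32):
        print(f"{name} at {size} in: {ppi(w, h, size):.0f} PPI")
```

That gives roughly 326 PPI for 8K at 27 inches and 275 PPI at 32 inches, versus about 163 PPI for 4K at 27 inches.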


Pricing on Radeon cards is actually pretty decent at the moment, as I found out while looking for a replacement GPU (the one I got back from ASUS RMA is in worse condition than when I shipped it, and I have to underclock it by 31% to prevent games from hanging my system). I was actually able to put together a PCPartPicker list with a 6700XT (currently you can get one for around $380 new), 32GB of RAM and a PCIe 4.0 NVMe for a little less than a PS5 plus the cost of eight years of basic PS Plus (assuming you pay in 3-month intervals). Granted, that is still a far cry from 2014 or so, when you could build a PC with console performance for $450-500, but I'd still consider it okay when you compare it against something that's subsidized from the start (especially considering the budget and midrange GPU market is kind of dead at the moment).

Ended up going with a new 6900XT, because from what I hear, my 850W PSU isn't gonna cut it for NVIDIA or AMD's upcoming GPUs, and you can get a 3090 right now on the second hand market for ~$900. That card should still be plenty for playing games at 3440x1440 with high refresh rates. That's not to say that the 4090 doesn't have fantastic performance (I've talked to someone who bought one for 3D art stuff), but I feel like it's more meant as a workstation card (offline rendering, video encoding, and AI applications come to mind), and that there's probably far better value elsewhere if you're solely playing games.

I was interested in checking out the Intel Arc A770 (Especially considering we haven't really seen that much benchmarking of how it performs on Linux using DXVK compared to Windows using Microsoft's own compatibility layer for old applications), but it sold out instantly on Newegg. Here's hoping the 4070 doesn't actually cost $900.


I don't know if it's a known thing, but I have been speculating that Nvidia pays partners to offer one of the partner cards at the same price as the FE cards in countries where the FE isn't available.

Thinking of skipping the FE (not even available in my country).

Asus TUF gaming 4090 seems to be the somewhat sane choice. Big heat sink, not too loud, and the same price as the FE.

The non-OC TUF cards were the FE-priced cards in the 30xx series as well, at least on the high end, which is probably why they were never properly in stock; it was always some kind of lottery to be allowed to buy them.

Edit: After researching a bit more, the PCB for that card seemed to get some serious criticism, so it didn't seem right to pay a price premium for it. I cancelled my order.

Edit 2: Actually, I was unsuccessful in cancelling and have received a tracking number for delivery. I could still decline it free of charge under Swedish law, but will consider what to do over the weekend.

This doesn't seem good, but I guess the risks and faults may be overstated for someone who does not intend to overclock at all?


While it's not great that they are cheaping out a bit on a card at this price, I don't think it will actually matter if you run it without OC (or even better, at a 350W power limit for 96% of the performance and much better efficiency).
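The efficiency claim is easy to put in numbers. A sketch using the post's own figures (350 W limit, ~96% of performance) and assuming a 450 W stock TGP for the 4090; the 96% is the poster's figure, not something this calculates:

```python
# Relative efficiency of a power-limited RTX 4090, using the figures quoted in the
# post (350 W limit keeping ~96% of performance) against an assumed 450 W stock TGP.
STOCK_POWER_W = 450.0
LIMITED_POWER_W = 350.0
LIMITED_PERF_FRACTION = 0.96  # as claimed in the post, not measured here


def perf_per_watt_gain(perf_fraction: float, limited_w: float, stock_w: float) -> float:
    """Ratio of perf/W at the limit to perf/W at stock (1.0 means no change)."""
    return perf_fraction / (limited_w / stock_w)


power_fraction = LIMITED_POWER_W / STOCK_POWER_W
gain = perf_per_watt_gain(LIMITED_PERF_FRACTION, LIMITED_POWER_W, STOCK_POWER_W)
print(f"Power limit: {power_fraction:.0%} of stock")
print(f"Perf/W vs stock: {gain:.2f}x")
```

So 350 W is about 78% of stock power, and performance per watt comes out roughly 1.23x stock, if the 96% figure holds.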

Also, the 4090 is a lot harder to buy than I initially expected. I haven't even seen any hint of a FE yet.


I would have preferred an FE, but with the official non-availability here in Sweden and the scarcity elsewhere, it seems like I would have to pay an unreasonable import premium to get one, while also getting a lesser warranty.

The TUF Gaming 4090 would have been nice as a second choice due to the lower price and a good PCB for its price, but some people seem to have been affected by coil whine, and it is very scarce here because of the lower price.

The Gainward 4090 is at least not as expensive as the OC models and does not make a lot of noise with the silent BIOS setting. Good to hear that the PCB will probably be fine for non-OC use. Also, not having to keep looking is probably worth something, maybe even 200 euros.

didamangi


And 4090/12VHPWR connector owners might want to read this anyway if you don't have the time to watch the video:

12VHPWR Cable Guide - CableMod

Thank you for choosing CableMod for your cabling needs! The launch of PCIE5 GPUs with the new 12VHPWR connector is an exciting move to the next generation of graphical power. This new connector can deliver the power required for these power-hungry cards, but also comes with some caveats that...


So you just get a 4cm long piece of wood and use that to keep the wire straight?

Maybe Nvidia can ship that for future GPUs. Call it "RTX Ultra Support Stick" or something, and put some RGB lights on it or something.

didamangi


You might be on to something here....

I got the Gainward 4090 Phantom today. I am happy with it! DLSS3 in Spider-Man feels really smooth, much better than when I tested it on my 3090. I can't wait for the DLSS3 patch in Cyberpunk!

didamangi


With how huge these cards are and the placement of the power connector, it wouldn't surprise me at all if a lot of people got some bent power cables in their cases as a result.

Kind of scary to be honest.

It's getting kinda ridiculous now tbh.

I am wondering if I should do a last CPU/RAM upgrade or if I should wait and do a full sweep next year.

I am on an AM4 board with a 3700X and 16GB RAM. The 5800X3D would be the last upgrade I could do; the 5800X is also looking like a possible buy.

GPU is a 3080.

I would have to do a cooler exchange anyway (Arctic Liquid Freezer Service thing), so why not swap it out if I can find a good price.


5800X3D is a gaming beast that goes toe-to-toe with their 7000 series CPUs in some games.

You’d be set for years if you got one of those.


One is DDR4 and the other DDR5. And the 7000 series has some kind of GPU on every CPU. Do they finally boost on all CPU cores, or just one at a time?


I doubt we’ll see DDR5 benefits for a while. If you really want DDR5 I’d wait a CPU generation or two.

And it’s not like DDR4 will be obsolete any time soon - you will probably still get good performance out of it right up until the end of the console generation.

If you want integrated GPU then yeah, 7000 series might be a better shout.

I don’t have an answer about the cores. All I know is the 5800X3D punches well above its weight in gaming benchmarks. I think it will be a sought-after CPU for years to come.

The DDR5 issue will be for a while the total platform cost. You need a new cpu, mobo and ram. DDR5 is still relatively expensive and at least the AM5 boards so far have been pretty pricey too.

As the last hurrah for the AM4 platform, the 5800X3D is quite beefy in gaming in particular; games like that big boy cache. I thought about upgrading to it too, but the 5600X is more than enough for me, for now anyway.


Yeah. The only benefit is with the iGPU: 20-30% more performance with DDR5. But when they release the G-series CPUs...


Posted in the Steam thread, but I'm looking for a new build soon(?). Timescales are a little TBD, but I expect within the next few months/Q1 2023.

First stab at a new build here

Keyboard, mouse, monitor and GPU would be carry-overs from my current build.

Ideally a prebuilt (yes yes yes, I know, DIY etc. etc., but no, and I'm willing to pay extra if the builder is good).

I expect this is fairly good? Any concerns? Anything that would age fairly quickly?


I would consider splashing out for a PCI-e Gen 4.0 NVMe.


You won’t see a difference in current games but DirectStorage is on the horizon and you will want to make the most of your IO.

You can get a Ryzen 7 5800X for a little bit more than the 5700X, so I would consider that instead.

Thanks, updated the list.


That’s looking like a really solid PC now.

Personally I’d upgrade to a 30 series GPU, but the 2070 Super is a good card so if you’re recycling it from your last build you’ll have a good time with it.


Hi all, would anyone be able to give some advice on a good TV to replace my OLED55B6V? It has a load of burn-in and nothing is shifting it.

I think I'm going to stick with OLED even though the current one got burn-in; I've used it a lot for gaming. I'm going to use the new TV just for regular viewing (not games) and keep the old one for gaming.

Looking at current LGs, I see the C2 seems like a good model, but to be honest there are so many different options out there that it's all a bit overwhelming, so any advice would be appreciated. Cheers.


Hopefully some of you have an answer. Basically I'm in a mood because I lost a 4TB SSD. It took out my Steam & GOG libraries (eh, no biggie, I lost some screenshots but meh), and I also lost all my modded Bethesda games, though again that's no big problem as I can rebuild them from Wabbajack or Vortex.

However, I had redirected some profile folders, so I lost a ton of streams that were unarchived by the streamer and had a tiny audience (so they are probably gone forever), screenshots of fun things in those streams, a ton of notes and documents, and my latest Firefox bookmarks. Nothing major, but there's enough random or obscure stuff gone that I'm grumpy about it, especially as I was literally going to back up the data the next day.

Anyway, I'm looking to get a 4-bay NAS to replace my old 2-bay and I'm leaning towards a Synology 920+, but the question I have is: do I go for HDD or SSD?

For the same price I can get roughly 4x the capacity in HDD as I can in SSD, but having lost both HDDs and an SSD recently, I'm asking Meta what they think.

Anything important is recorded in emails etc., so that's fine and not a worry. Also, the NAS will not be on 24x7, but probably once a week to copy across fresh data, perform system updates etc. My housemate lost his NAS when 2 of his drives died in quick succession, so he's set on SSDs for the next one, but damn, they're still stupid fucking expensive for home use.

It's a lot of cash, but I've had my once-a-decade reminder not to be too complacent about my data.

Any tips, advice or links are welcome, but I am leaning towards 4x 4TB WD Red Pro HDDs in SHR/RAID5, giving me 12TB of usable space.
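For what it's worth, the 12TB figure checks out. A sketch of classic RAID 5 capacity: one drive's worth of space goes to parity, so the array survives a single drive failure (but not two, as the housemate found out). With identical drives, Synology's SHR-1 gives the same usable space. The TiB conversion is there because drives are sold in decimal TB while many operating systems report binary units:

```python
def raid5_usable_tb(drive_sizes_tb: list[float]) -> float:
    """Usable capacity of a classic RAID 5 array: one drive's worth goes to parity,
    and every member is treated as the size of the smallest drive."""
    if len(drive_sizes_tb) < 3:
        raise ValueError("RAID 5 needs at least three drives")
    return (len(drive_sizes_tb) - 1) * min(drive_sizes_tb)


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (as drives are sold) to binary tebibytes (as many OSes report)."""
    return tb * 10**12 / 2**40


usable = raid5_usable_tb([4, 4, 4, 4])
print(f"{usable} TB usable, about {tb_to_tib(usable):.1f} TiB")
```

This prints 12 TB usable, about 10.9 TiB, before any filesystem overhead.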


Anyone using a Fractal Design Torrent case? I upgraded to a 5800x3D and have a 6900XT coming on Friday so I decided to swap out my Fractal Design Define R6 for a new airflow case. It sounds like this case will be fairly quiet for an airflow case which is extremely important to me as this is a work PC too. I have heard some worrying things about build quality and cable management so I would love to hear opinions from any owners.